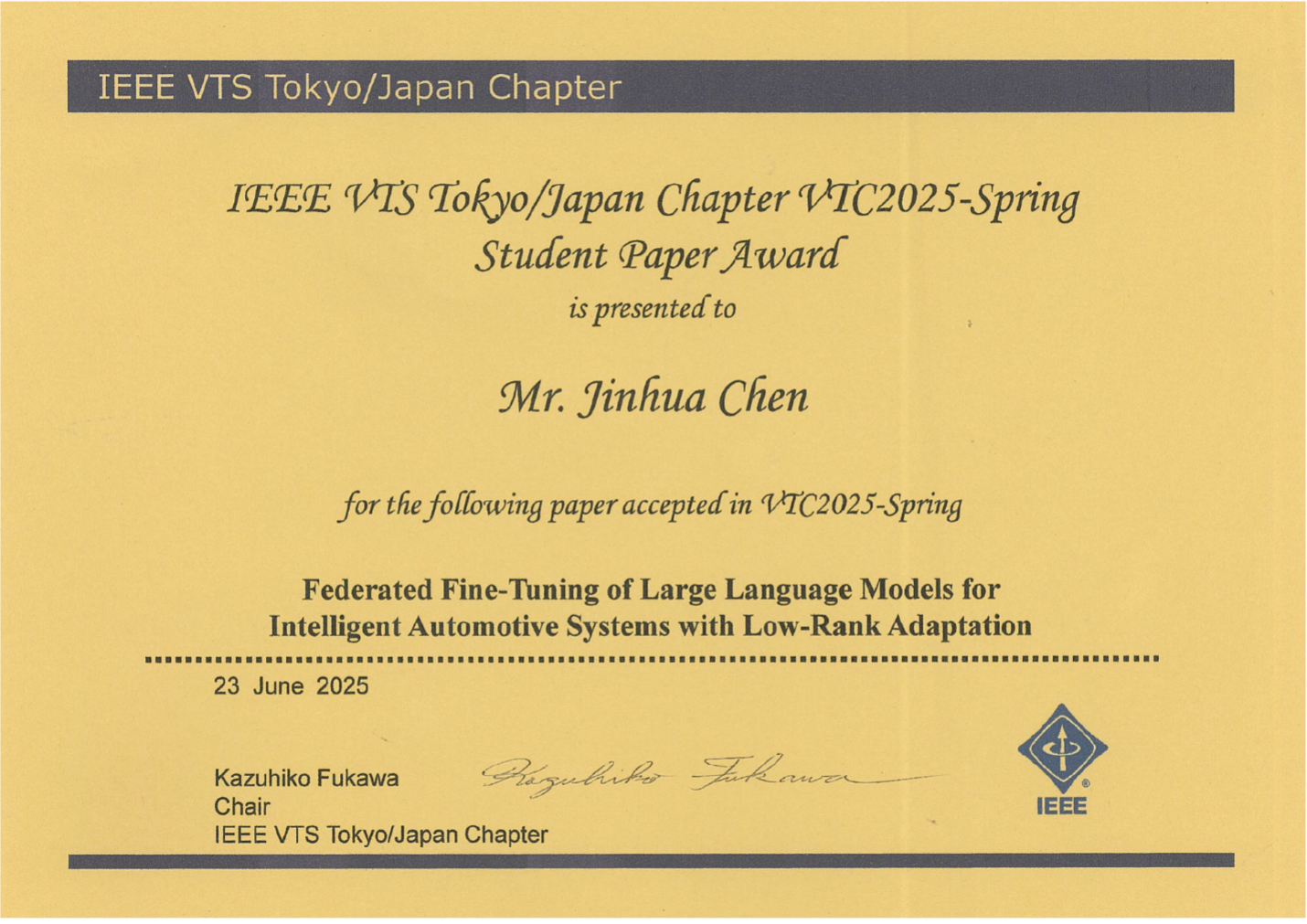

Jinhua Chen (majoring in Applied Informatics at the Graduate School of Science and Engineering) received the Student Paper Award from the IEEE VTS Tokyo/Japan Chapter at the 2025 IEEE 101st Vehicular Technology Conference (IEEE VTC2025-Spring).

・Winner

Jinhua Chen (a second-year PhD student in the Yu Lab, majoring in Applied Informatics at the Graduate School of Science and Engineering)

・Conference

The 2025 IEEE 101st Vehicular Technology Conference: IEEE VTC2025-Spring

・Date

June 17 to June 20, 2025

・Awarded date

June 23, 2025

・Conference Venue

Oslo, Norway

・Award name

Student Paper Award

・Name of award-winning paper

Federated Fine-Tuning of Large Language Models for Intelligent Automotive Systems with Low-Rank Adaptation

・Summary of research

Integrating Large Language Models (LLMs) into intelligent automotive systems offers significant benefits, such as better natural language understanding, improved user interaction, and more intelligent decision-making. However, this integration also faces important challenges, including data heterogeneity, limited computational resources, and the critical need to safeguard user privacy. Federated Learning (FL) offers a promising solution by enabling decentralized training across distributed data sources while keeping raw user data on local devices. This paper proposes a novel FL framework designed specifically for in-vehicle systems that targets two of these challenges: data heterogeneity and limited computational resources. Our method introduces a robust aggregation algorithm based on the L2 norm between the LLM increments (model updates) uploaded by clients, which mitigates data inconsistencies and enhances model generalization. Moreover, by integrating Low-Rank Adaptation (LoRA) for parameter-efficient fine-tuning, the framework reduces computational and communication overhead while preserving privacy. Comprehensive experiments show that the proposed method outperforms state-of-the-art FL methods, achieving a Vicuna score of 8.17, a harmless answer rate of 68.65% on AdvBench, and an average MT-Bench score of 3.74. These results highlight the potential of the proposed FL-based LLM fine-tuning framework with LoRA to revolutionize intelligent automotive systems through enhanced adaptability and privacy preservation.
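The summary names two mechanisms without giving their details. The following is a minimal, illustrative sketch under stated assumptions, not the paper's algorithm. It shows two things. The first is why LoRA shrinks what each vehicle must train and upload: a standard rank-r update ΔW = BA with B of size d×r and A of size r×k has r(d+k) parameters instead of d×k. The second is one hypothetical reading of "aggregation based on the L2 norm between LLM increments". Here each client's flattened LoRA increment is weighted by the inverse of its summed L2 distance to the other clients' increments, so that updates far from the rest (for example, from highly heterogeneous data) count less. The function names `lora_param_count`, `l2_weights`, and `l2_weighted_aggregate`, the inverse-distance weighting rule, and the `eps` parameter are all assumptions made for illustration.

```python
import math
from typing import List, Sequence


def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable parameters of a standard rank-r LoRA update B @ A,
    where B is d x r and A is r x k (versus d * k for full fine-tuning)."""
    return r * (d + k)


def l2_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean (L2) distance between two flattened parameter increments."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def l2_weights(increments: Sequence[Sequence[float]], eps: float = 1e-8) -> List[float]:
    """HYPOTHETICAL weighting rule: each client's weight is proportional to the
    inverse of its summed L2 distance to every other client's increment."""
    n = len(increments)
    if n == 0:
        raise ValueError("need at least one client increment")
    scores = [
        sum(l2_distance(increments[i], increments[j]) for j in range(n) if j != i)
        for i in range(n)
    ]
    raw = [1.0 / (s + eps) for s in scores]  # eps avoids division by zero
    total = sum(raw)
    return [x / total for x in raw]  # normalize so weights sum to 1


def l2_weighted_aggregate(increments: Sequence[Sequence[float]], eps: float = 1e-8) -> List[float]:
    """Weighted average of client increments using the hypothetical L2 weights."""
    weights = l2_weights(increments, eps)
    dim = len(increments[0])
    return [sum(w * inc[k] for w, inc in zip(weights, increments)) for k in range(dim)]


if __name__ == "__main__":
    # One 4096 x 4096 projection matrix with rank 8:
    # 65,536 LoRA parameters versus 16,777,216 for full fine-tuning.
    print(lora_param_count(4096, 4096, 8), 4096 * 4096)

    # Two similar clients and one outlier: the outlier gets the smallest weight.
    clients = [[1.0, 1.0], [1.1, 0.9], [5.0, -3.0]]
    print(l2_weights(clients))
    print(l2_weighted_aggregate(clients))
```

In this toy run, the aggregate's first coordinate lands closer to the two similar clients than a plain average would. Because only the small LoRA matrices are exchanged, the distance computation also runs over far fewer numbers than full model weights would require.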