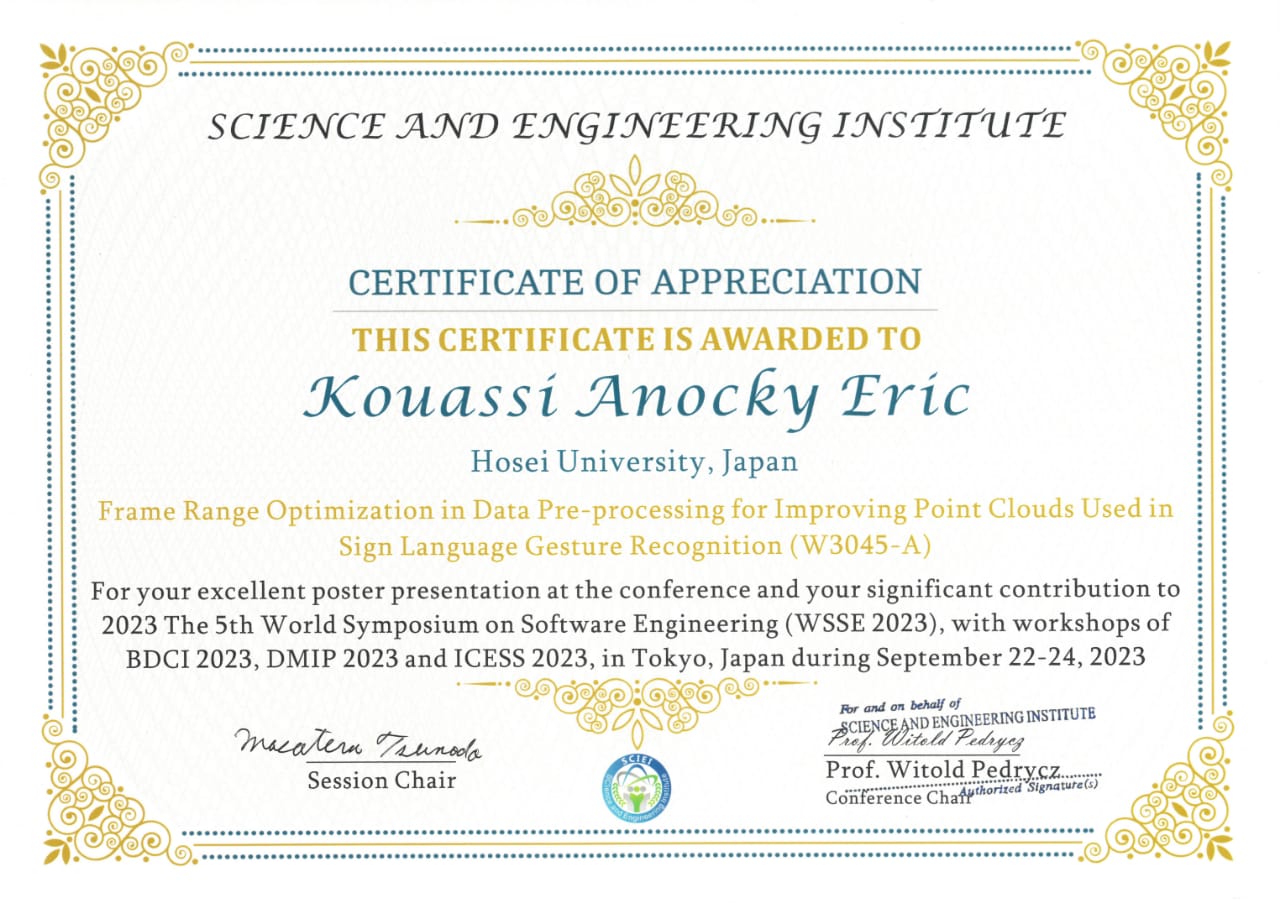

KOUASSI Anocky Eric Rene Raymond (major in Computer and Information Sciences, Graduate School of Computer and Information Sciences) won a Certificate of Appreciation for an excellent poster presentation at the 5th World Symposium on Software Engineering (WSSE 2023), with Workshops on Big Data and Computational Intelligence (BDCI).

・Winner

KOUASSI Anocky Eric Rene Raymond (2nd year master’s student, major in Computer and Information Sciences, Graduate School of Computer and Information Sciences)

・Conference Name

The 5th World Symposium on Software Engineering (WSSE 2023), with Workshops on Big Data and Computational Intelligence (BDCI)

・Conference Period

22-24 Sep 2023

・Presentation and Award Date

22 Sep 2023

・Conference Venue

TKP Tokyo Station Conference Center

・Paper’s title

Frame Range Optimization in Data Pre-processing for Improving Point Cloud Used in Sign Language Gesture Recognition

・Authors

KOUASSI Anocky Eric Rene Raymond, Hosei University, 2nd year of Master’s course of Computer and Information Sciences

Kong Lin, Hosei University, 2nd year of Master’s course of Computer and Information Sciences

Runhe Huang, Hosei University, Professor, Faculty of Computer and Information Sciences

Using a reasonable frame range when pre-processing data samples is critical for obtaining higher-quality point cloud data and, in turn, better accuracy in sign language gesture recognition. This research aims to optimize the frame range of the point clouds generated for American Sign Language (ASL). A non-contact device, an IWR6843AOP millimeter-wave radar sensor, is used to capture fifteen (15) ASL gestures, with about 800 samples per sign. The frame range initially spans 11 to 120. An LSTM [3] model was first trained on the 15 gestures with this initial frame range, resulting in 88.2% overall accuracy. To further improve accuracy, the frame range is optimized using a histogram distribution, which plots the number of samples against the number of frames for all 15 gestures fused together (approximately 800 samples per gesture). To find an optimal frame range, Algorithm 1 and Algorithm 2 start at the center of the peak (47) and expand the frame number range to the left and right until at least 10,500 data samples required for training fall within the range (30-54). To evaluate the algorithms, an LSTM and a Bidirectional LSTM were trained with data within the obtained range, yielding accuracies of 89.2% and 91.2%, respectively. These results demonstrate the effectiveness of our approach.
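The announcement does not reproduce Algorithm 1 or Algorithm 2, so the following is only a minimal sketch of the general idea described in the abstract: start at the histogram peak and widen the frame range until it covers a required number of samples. The function name `expand_frame_range`, the greedy rule for choosing which side to widen first, and the toy data are all assumptions made for illustration and are not taken from the paper.

```python
from collections import Counter


def expand_frame_range(frame_counts, min_samples):
    """Widen a frame range around the histogram peak until it holds
    at least `min_samples` samples.

    frame_counts: one integer per sample, the number of frames in that sample.
    Returns (low, high, covered), with low and high inclusive.

    Assumption (not from the paper): at each step the range grows toward
    whichever neighbouring bin holds more samples; ties go to the left.
    """
    if not frame_counts:
        raise ValueError("frame_counts is empty")
    if min_samples > len(frame_counts):
        raise ValueError("min_samples exceeds the number of samples")

    hist = Counter(frame_counts)  # frame number -> number of samples
    f_min, f_max = min(hist), max(hist)

    # Peak of the histogram; ties are resolved toward the smaller frame number.
    peak = max(hist, key=lambda f: (hist[f], -f))
    low = high = peak
    covered = hist[peak]

    while covered < min_samples:
        # -1 marks a side that cannot be extended any further.
        left = hist[low - 1] if low > f_min else -1
        right = hist[high + 1] if high < f_max else -1
        if left >= right:
            low -= 1
            covered += hist[low]
        else:
            high += 1
            covered += hist[high]

    return low, high, covered


# Toy data (hypothetical): most samples have about 47 frames.
toy = [47] * 5 + [46] * 3 + [48] * 4 + [45] * 1 + [49] * 2 + [60] * 1
low, high, covered = expand_frame_range(toy, min_samples=12)
print(f"range {low}-{high} covers {covered} of {len(toy)} samples")
```

With the figures quoted in the abstract, the call would take roughly 12,000 per-sample frame counts and `min_samples=10500`; whether this greedy rule would reproduce the reported 30-54 range depends on the actual data and on the details of the authors' algorithms.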